In 2016, Andrew J. Vickers and Emily A. Vertosick published an empirical study of race times in recreational endurance runners, using data they’d collected from more than 2,300 runners through an internet survey. They reached a number of interesting conclusions and, even better, they published their data. That means anyone can poke around in it to confirm the findings in the paper or try to tease out additional information.

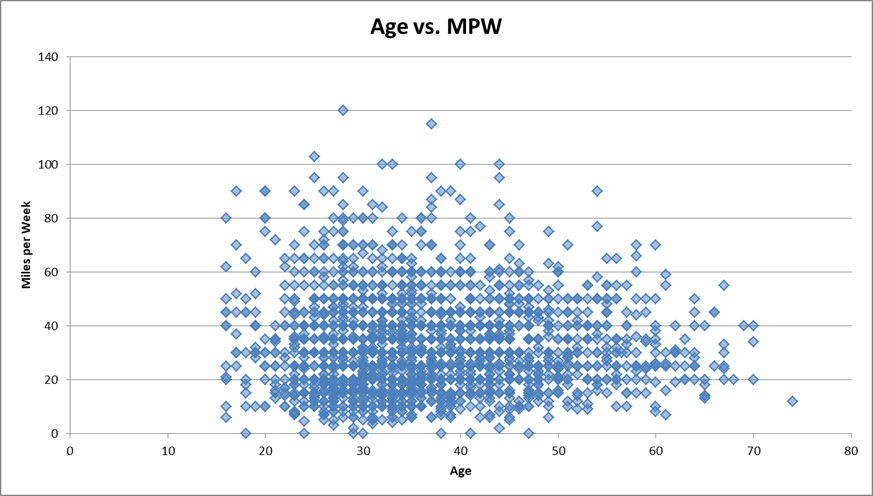

One thing I’ve wondered about was how a runner’s weekly mileage changes over time. So I plotted age versus typical weekly mileage for the runners in the study:

There’s a lot of randomness, but the average weekly total doesn’t change appreciably as runners age. It stays near the average for all runners in the study (about 32.5 miles per week). There are more runners below the average than above it because it’s a lot easier to run 15 miles a week than it is to run 80. The farthest outliers, though, are above the average, because mileage can’t drop below zero: it’s a lot easier to run 80 miles a week than it is to run -17.5.
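If you want to check that lopsidedness yourself, here is a minimal sketch of the numbers I’d look at. It assumes you’ve already pulled the weekly mileage column out of the published data into a plain Python list; the function name `summarize_mileage` and the sample values are mine, made up for illustration, and are not from the study.

```python
from statistics import mean, median

def summarize_mileage(weekly_miles):
    """Return the mean, the median, and how lopsided the values are around the mean."""
    avg = mean(weekly_miles)
    below = sum(1 for m in weekly_miles if m < avg)
    return {
        "mean": avg,
        "median": median(weekly_miles),
        # Share of runners whose mileage is strictly below the mean.
        "share_below_mean": below / len(weekly_miles),
        # Distance from the mean to the farthest value on each side.
        "max_above_mean": max(weekly_miles) - avg,
        "max_below_mean": avg - min(weekly_miles),
    }

# Made-up illustrative values, NOT taken from the study's data.
example = [10, 15, 20, 25, 30, 80]
summary = summarize_mileage(example)
print(summary)
```

A median below the mean, together with a share below the mean that is greater than one half, is the pattern described above: most runners sit under the average while a few high-mileage runners pull it up.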

One thing that stands out to me is how the outliers tend to disappear as the runners get older. We tend to dwell on how we can’t run as far as we age, and you can see that in the data. But you can also see that the number of runners logging extremely low mileage shrinks too. I’m guessing that’s because anyone who’s still running in their 50s and 60s likes running enough to do it regularly, rather than only getting out often enough to run a local 5K once or twice a year. Also, since there are fewer older runners, it’s not surprising to see fewer outliers of either kind.
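One rough way to put numbers on that narrowing is to group runners by decade of age and compare the count and the range of weekly mileage in each group. This is only a sketch: it assumes the data has been reduced to `(age, weekly_miles)` pairs, the helper name `spread_by_decade` is hypothetical, and the sample pairs are invented for illustration rather than drawn from the study.

```python
from collections import defaultdict

def spread_by_decade(runners):
    """Group (age, weekly_miles) pairs by decade; report count, min, and max per group."""
    bands = defaultdict(list)
    for age, miles in runners:
        decade = (age // 10) * 10  # e.g. 27 -> 20, 55 -> 50
        bands[decade].append(miles)
    return {
        decade: {"n": len(values), "min": min(values), "max": max(values)}
        for decade, values in sorted(bands.items())
    }

# Invented illustrative pairs, NOT taken from the study's data.
example = [(24, 5), (27, 90), (29, 30), (33, 35), (38, 12), (55, 25), (61, 40)]
result = spread_by_decade(example)
for decade, stats in result.items():
    print(f"{decade}s: {stats}")
```

Reporting the count next to the range matters here, because a smaller group will tend to show a narrower range even if the underlying habits are the same.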

As the authors note, the data is self-reported by runners who self-selected into the survey, so you can’t be sure how representative it really is.